Image credit: Lee et al., NeurIPS Workshop 2021

Abstract

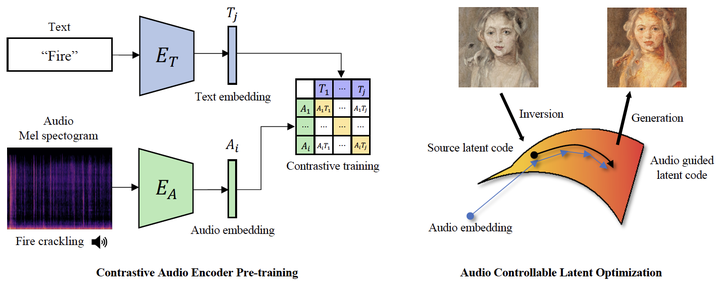

There have been long-standing efforts to bring artistic fields such as painting into computer-based creation. In contrast to realism, the field of non-photorealistic rendering (NPR) has focused on creating artificial stylized renderings of paintings, drawings, and cartoons. With the rapid development of generative models, impressive computer-generated paintings based on artistic style learning have appeared. At the same time, researchers have started to propose methods that produce creative paintings beyond simply adopting the artistic style of existing masterpieces. These include using auditory and visual sources to manipulate an original masterpiece into a novel style. Recent work manipulates an original masterpiece to integrate semantic understanding from an audio source through audio-visual correlation learning. In previous works, researchers proposed an audio-reactive interpolation method based on musical features, and a latent transfer mapping from deep music embeddings to style embeddings, for video/image generation. However, these approaches mainly used features and attributes of music, which made it hard to achieve semantically meaningful image manipulation from a given audio source. We propose extending the domain of StyleCLIP to audio in order to overcome this limitation. StyleCLIP performs text-based image manipulation by mapping the latent features of text to those of an image. In our case, we replace text with audio to design audio-based semantic image manipulation. In particular, we encode both audio and image into the same latent space. This allows us to combine the advantages of both CLIP (an effective representation of similarity) and StyleGAN (high-quality image generation). In this work, we introduce a semantic audio-reactive painting generation model. We believe that our proposal has high potential for creating a new field of computer art by narrowing the gap between music and visual art, which have been regarded as separate fields of art.
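To make the idea of a shared latent space concrete, the following is a minimal toy sketch, not the authors' implementation. It assumes a StyleCLIP-style latent optimization in which a latent code is nudged so that the embedding of the generated image moves closer (in cosine similarity) to an audio embedding, while a regularization term keeps the code near the original painting. Everything named here is hypothetical: `PROJECTION` is a small fixed linear map standing in for "generator followed by image encoder", `audio_embedding` is a hand-picked vector rather than the output of a trained audio encoder, and a finite-difference gradient replaces automatic differentiation.

```python
import math

# Hypothetical stand-in for "image encoder applied to generator output":
# a fixed linear map from a 3-D latent code to a 2-D shared embedding space.
PROJECTION = [[1.0, 0.5, -0.3],
              [-0.2, 0.8, 0.6]]


def embed_image_from_latent(latent):
    """Map a latent code to the shared embedding space (toy linear model)."""
    return [sum(p * x for p, x in zip(row, latent)) for row in PROJECTION]


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def manipulation_loss(latent, source_latent, audio_embedding, reg_weight=0.1):
    """(1 - cosine similarity to the audio) + penalty for drifting from the source."""
    image_embedding = embed_image_from_latent(latent)
    similarity_term = 1.0 - cosine_similarity(image_embedding, audio_embedding)
    drift = sum((x - s) ** 2 for x, s in zip(latent, source_latent))
    return similarity_term + reg_weight * drift


def numerical_gradient(fn, point, eps=1e-6):
    """Central finite differences; a real system would use autodiff instead."""
    grad = []
    for i in range(len(point)):
        up = list(point)
        down = list(point)
        up[i] += eps
        down[i] -= eps
        grad.append((fn(up) - fn(down)) / (2 * eps))
    return grad


def manipulate_latent(source_latent, audio_embedding, steps=200, step_size=0.1):
    """Gradient descent that only accepts steps which lower the loss."""
    def loss_fn(w):
        return manipulation_loss(w, source_latent, audio_embedding)

    latent = list(source_latent)
    current = loss_fn(latent)
    for _ in range(steps):
        grad = numerical_gradient(loss_fn, latent)
        candidate = [x - step_size * g for x, g in zip(latent, grad)]
        candidate_loss = loss_fn(candidate)
        if candidate_loss < current:
            latent, current = candidate, candidate_loss
        else:
            step_size *= 0.5  # step overshot: shrink it and retry
    return latent


source_latent = [1.0, 0.0, 0.0]   # toy latent code of the original painting
audio_embedding = [0.0, 1.0]      # toy audio embedding in the shared space
edited_latent = manipulate_latent(source_latent, audio_embedding)

before = cosine_similarity(embed_image_from_latent(source_latent), audio_embedding)
after = cosine_similarity(embed_image_from_latent(edited_latent), audio_embedding)
print(f"similarity before: {before:.3f}, after: {after:.3f}")
```

The regularization weight `reg_weight` controls the trade-off in this sketch: a larger value keeps the edited latent closer to the source painting, while a smaller value lets the audio embedding pull it further.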