Abstract

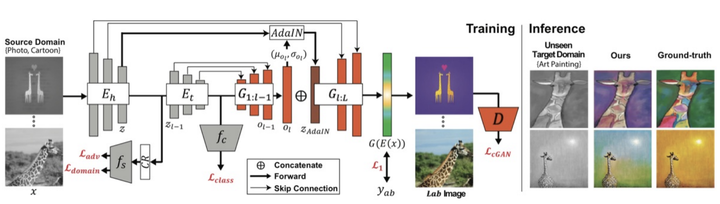

We propose a novel automatic colorization technique that learns domain invariance across multiple source domains and leverages this invariance to colorize grayscale images in unseen target domains. This is particularly useful for colorizing sketches, line art, and line drawings, which are generally difficult to colorize due to a lack of data. To address this issue, we first apply existing domain generalization (DG) techniques, which, however, produce less compelling, desaturated images because the network over-emphasizes learning domain-invariant contents (or shapes). We therefore propose a new domain-generalizable colorization model consisting of two modules: (i) a domain-invariant, content-biased feature encoder and (ii) a source-domain-specific color generator. To mitigate the lack of source-domain-specific color information in domain-invariant features, we propose a skip connection that transfers content feature statistics via adaptive instance normalization. Our experiments on the publicly available PACS and Office-Home DG benchmarks confirm that our model produces perceptually reasonable colorized images. Further, we conduct a user study in which human evaluators are asked to (1) judge whether a generated image looks naturally colored and (2) choose the best generated image among the alternatives. Our model significantly outperforms the alternatives, confirming the effectiveness of the proposed method.
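For readers unfamiliar with adaptive instance normalization (AdaIN), the following is a minimal NumPy sketch of the standard operation, not our implementation. The function name `adain`, the `(C, H, W)` feature layout, the `eps` value, and the choice of which feature map supplies the statistics are illustrative assumptions only.

```python
import numpy as np

def adain(x, ref, eps=1e-5):
    """Standard AdaIN: re-normalize x to carry ref's per-channel statistics.

    x, ref: feature maps of shape (C, H, W) (layout is an assumption).
    """
    # Per-channel mean and standard deviation over the spatial dimensions.
    mu_x = x.mean(axis=(1, 2), keepdims=True)
    sd_x = x.std(axis=(1, 2), keepdims=True)
    mu_r = ref.mean(axis=(1, 2), keepdims=True)
    sd_r = ref.std(axis=(1, 2), keepdims=True)
    # Whiten x per channel, then rescale and shift with ref's statistics.
    return sd_r * (x - mu_x) / (sd_x + eps) + mu_r

# Hypothetical stand-ins for a generator feature and an encoder feature.
rng = np.random.default_rng(0)
generator_feat = rng.normal(0.0, 1.0, size=(4, 8, 8))
encoder_feat = rng.normal(2.0, 3.0, size=(4, 8, 8))
out = adain(generator_feat, encoder_feat)
```

In this sketch the output keeps the spatial layout of `generator_feat` while its per-channel mean and standard deviation match those of `encoder_feat`, which is the sense in which a skip connection can pass feature statistics rather than raw activations.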