Text-Driven Prototype Learning for Few-Shot Class-Incremental Learning

Abstract

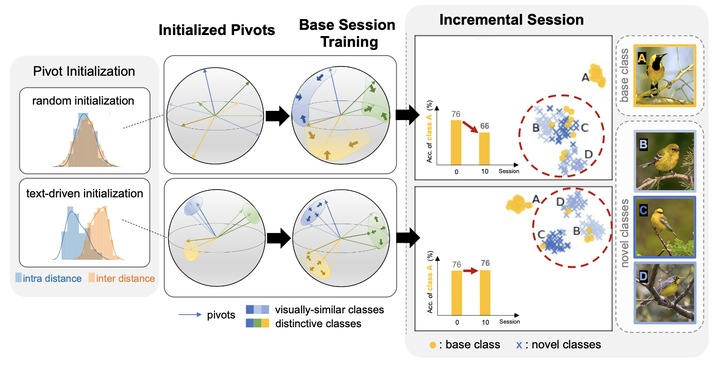

Few-shot class-incremental learning (FSCIL) aims to learn generalizable representations from a large amount of initial data and then incrementally adapt to new classes with limited data (i.e., few-shot). Recently, prototype-based approaches have shown notably improved performance. However, challenges remain: their performance often degrades when newly added classes are highly similar to previously seen classes, making the prototypes indistinguishable. In this work, we advocate leveraging textual semantics to learn class-representative and class-distinguishable prototypes that retain the semantic relations between classes. We use an angular margin loss to exploit textual semantics effectively, encouraging intra-class compactness and inter-class discrepancy in the embedding space. Our experiments on three public benchmarks (CUB200, CIFAR100, and miniImageNet) show that the proposed method generally matches or outperforms current state-of-the-art approaches. To further demonstrate the effectiveness of text in the FSCIL task, we collect visually descriptive and class-discriminative descriptions for two widely used FSCIL benchmarks, yielding CIFAR100-Text and miniImageNet-Text. These new collections will be made publicly available upon publication.
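As an illustration of the angular margin idea mentioned above, the following is a minimal sketch and not the exact loss used in this work. It assumes an additive angular margin (ArcFace-style) applied to the cosine similarity between an image feature and per-class prototypes, where the prototypes stand in for text embeddings of class descriptions. The function name `angular_margin_loss` and the `margin` and `scale` values are hypothetical choices made for this example.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def angular_margin_loss(feature, prototypes, label, margin=0.1, scale=16.0):
    """Cross-entropy over scaled cosine logits, with an additive angular
    margin on the ground-truth class (an assumed ArcFace-style form).

    feature:    image embedding (list of floats)
    prototypes: one vector per class; assumed here to be text embeddings
    label:      index of the ground-truth class
    """
    f = normalize(feature)
    logits = []
    for k, proto in enumerate(prototypes):
        p = normalize(proto)
        cos = sum(a * b for a, b in zip(f, p))
        cos = max(-1.0, min(1.0, cos))  # guard acos against rounding error
        if k == label:
            # Penalize the target class by enlarging its angle, which
            # pushes features closer to their own prototype in training.
            theta = math.acos(cos)
            cos = math.cos(min(theta + margin, math.pi))
        logits.append(scale * cos)
    # Numerically stable log-sum-exp for the softmax denominator.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


# Two similar class prototypes, as in the hard case described above.
protos = [[1.0, 0.0], [0.9, 0.3]]
loss_with_margin = angular_margin_loss([1.0, 0.1], protos, label=0, margin=0.1)
loss_no_margin = angular_margin_loss([1.0, 0.1], protos, label=0, margin=0.0)
```

With the margin enabled, the loss for the same feature is larger than without it, so the model must place features closer (in angle) to the correct prototype to reach the same loss. This is one way to encourage intra-class compactness and inter-class separation.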