Leveraging Inductive Bias in ViT for Medical Image Diagnosis

Abstract

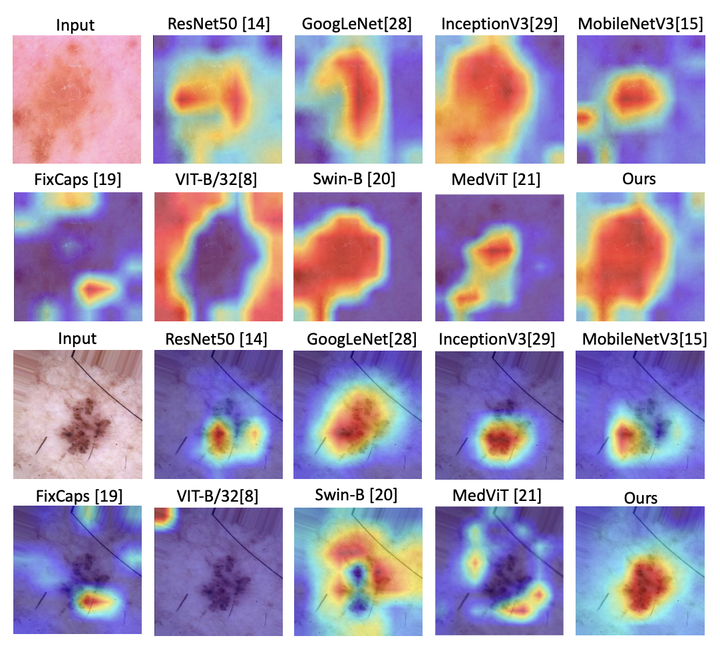

Recent advances in attention-based models have raised expectations for automated diagnosis applications in computer vision owing to their high performance. However, attention-based models tend to lack some of the inherent assumptions about images, known as inductive biases, that convolution-based models possess. Herein, we customize a vision transformer (ViT) model to improve its performance on limited medical image data by exploiting the locality inductive bias. Specifically, using the ViT model as a backbone, we propose incorporating shifted window attention (SWA), deformable attention (DA), and a convolutional block attention module (CBAM) to leverage the locality inductive bias of convolutional neural networks, thereby capturing both the global and local context of lesions. To evaluate the effectiveness and efficiency of our proposed method, we use several well-known, publicly available medical image datasets, including HAM10000, MURA, ISIC 2018, and CVC-ClinicDB, for classification and dense prediction tasks. Experimental results show that our method significantly outperforms other state-of-the-art alternatives. Furthermore, we use Grad-CAM++ to qualitatively visualize the image regions the network attends to. Our code is available at https://github.com/ai-kmu/Medical_CBAM_ViT.
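To make the CBAM component concrete, the following is a minimal NumPy sketch of a CBAM-style block (channel attention from a shared MLP over average- and max-pooled descriptors, followed by spatial attention from a 7×7 convolution over channel-wise mean and max maps) applied to ViT patch tokens. It is not the authors' implementation (see the linked repository for that). Several choices are assumptions for illustration only: the CBAM block is assumed to act on patch tokens reshaped to a square 2D grid with any CLS token excluded, the reduction ratio `r = 8` is hypothetical, the weights are random, and the helper names (`cbam`, `cbam_on_vit_tokens`) are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """x: (C, H, W). Shared 2-layer MLP (C -> C/r -> C, ReLU) on pooled descriptors."""
    avg = x.mean(axis=(1, 2))   # (C,) average-pooled channel descriptor
    mx = x.max(axis=(1, 2))     # (C,) max-pooled channel descriptor
    mlp = lambda v: w2 @ np.maximum(w1 @ v, 0.0)
    return sigmoid(mlp(avg) + mlp(mx))  # (C,) weights in (0, 1)

def spatial_attention(x, kernel):
    """kernel: (2, k, k) conv weights over the [mean, max] channel-pooled maps."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H, W
    H, W = x.shape[1:]
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            # Local k x k window: this is where the locality bias comes from.
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)  # (H, W) weights in (0, 1)

def cbam(x, w1, w2, kernel):
    """Sequential CBAM: channel attention first, then spatial attention."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]

def cbam_on_vit_tokens(tokens, grid, w1, w2, kernel):
    """Hypothetical integration: tokens (N, D) with N = grid * grid, no CLS token."""
    fmap = tokens.T.reshape(-1, grid, grid)   # (D, H, W); token i*grid+j -> (i, j)
    out = cbam(fmap, w1, w2, kernel)
    return out.reshape(out.shape[0], -1).T    # back to (N, D)

# Usage with random weights (e.g. a 224x224 image with 16x16 patches -> 14x14 grid).
rng = np.random.default_rng(0)
grid, D, r = 14, 64, 8
tokens = rng.standard_normal((grid * grid, D))
w1 = rng.standard_normal((D // r, D)) * 0.1
w2 = rng.standard_normal((D, D // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
out = cbam_on_vit_tokens(tokens, grid, w1, w2, kernel)
print(out.shape)  # (196, 64)
```

Because both attention maps lie in (0, 1), the block can only reweight token features, never amplify them; the learned 7×7 spatial kernel injects a convolution-style local neighborhood that plain global self-attention does not impose.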